Generative AI and large language models (LLMs) are spearheading the AI revolution, transforming industries and businesses. Partnering with an experienced team like Techsolvers is essential to implementing LLM solutions successfully. Our specialists are ready to help with your company's generative AI needs.

Tailored Retrieval-Augmented Generation (RAG)

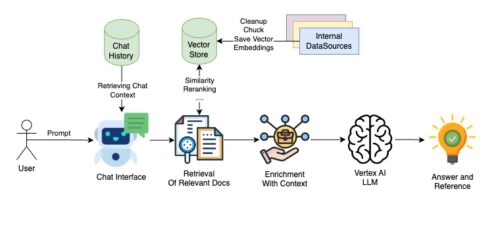

Retrieval-augmented generation is an AI framework that retrieves facts from an external knowledge base to ground large language models (LLMs) in the most accurate, up-to-date information and to give users insight into how the model arrives at its answers.
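To make the retrieve-then-generate flow concrete, here is a minimal sketch, assuming a tiny hypothetical in-memory knowledge base. It scores passages with term-frequency cosine similarity (a simple stand-in for the embedding search a production system would use), then places the top passages and their IDs ahead of the user's question. The prompt it builds would be sent to an LLM; that call is omitted here. Listing source IDs in the prompt is what lets the answer cite where its facts came from.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would use a document store.
KNOWLEDGE_BASE = {
    "doc-1": "Our support line is open Monday to Friday, 9am to 5pm.",
    "doc-2": "Refunds are processed within 14 days of receiving the returned item.",
    "doc-3": "Premium plans include priority email support.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Ground the LLM by placing retrieved passages, tagged with their IDs, before the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, k))
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


print(build_prompt("How long do refunds take?"))
```

Because the model is instructed to answer only from the supplied sources, updating the knowledge base changes its answers without retraining the model.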

- Chatbots and Virtual Assistants: RAG can be used to improve the conversational AI of chatbots and virtual assistants, enabling them to provide more accurate and helpful responses to user queries.

- Question Answering Systems: RAG can improve question answering systems by combining retrieval-based approaches, which find relevant passages or documents, with generation-based models that produce detailed, accurate answers.

- Education and Learning: RAG can enhance educational resources by generating personalized learning materials, practice problems, and assessments.

- Content Recommendation Systems: RAG can enhance content recommendation systems by combining retrieval of relevant content with generation of personalized recommendations. This approach can improve the system’s ability to suggest relevant articles, videos, products, or resources based on user preferences and context.

The Techsolvers AI team has built multiple production-grade RAG systems for a range of customers.

We have built both internal and customer-facing RAG systems, grounded in real-time, up-to-date data, using LangChain, LlamaIndex, vector databases, and various combinations of LLMs.
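Keeping a RAG system grounded in current data largely comes down to how the vector index is updated. The sketch below is illustrative only and is not the API of LangChain, LlamaIndex, or any particular vector database. It assumes a hypothetical `embed()` function (a hashed bag-of-words placeholder for a real embedding model) and an in-memory store keyed by document ID. Re-upserting a document under the same ID replaces the stale vector, so the next query retrieves the current version.

```python
import math
import re
import zlib

DIM = 64  # toy embedding size; real embedding models use far more dimensions


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's salted hash()
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Minimal vector index keyed by document ID (illustrative, not a real vector DB client)."""

    def __init__(self) -> None:
        self._docs: dict[str, tuple[str, list[float]]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert a new document or overwrite an existing one, re-embedding its text."""
        self._docs[doc_id] = (text, embed(text))

    def delete(self, doc_id: str) -> None:
        """Remove a document if present."""
        self._docs.pop(doc_id, None)

    def search(self, query: str, k: int = 1) -> list[tuple[str, str, float]]:
        """Return the top-k (doc_id, text, score); vectors are unit length, so dot = cosine."""
        q = embed(query)
        hits = [
            (doc_id, text, sum(a * b for a, b in zip(q, vec)))
            for doc_id, (text, vec) in self._docs.items()
        ]
        return sorted(hits, key=lambda h: h[2], reverse=True)[:k]


store = InMemoryVectorStore()
store.upsert("pricing", "The basic plan costs 10 dollars per month.")
print(store.search("How much is the basic plan?"))
# When the source data changes, upserting the same ID replaces the stale entry.
store.upsert("pricing", "The basic plan costs 12 dollars per month.")
print(store.search("How much is the basic plan?"))
```

Keying entries by a stable document ID is the design choice that makes updates cheap: only the changed document is re-embedded, and no duplicate, outdated copy remains to be retrieved.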